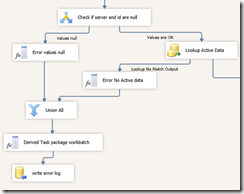

In a package developed with SSIS 2008 I had to log errors (such as missing data in records processed in the data flow) in a log table. In this data flow different types of error messages are generated and logged, while valid records are processed.further. In short, the valid data is processed and the users can have a look at the errorlog verify if any data was missing.

The errormessages differ but the packagename, taskname and some other data supplied to the errorlog is constant, so I used derived column transformations to generate the errormessage and merge to connect different error message outputs to the same derived column transform for taskname. Finally this data is written to the log. To merge I sometimes needed to sort the output before merging, which was a nuisance, because the order of the errormessages in the log for did not matter.

Each record from the data source undergoes some validation and additional data is looked up, if validation fails the a Dervied Errormessage is created (e.g.in No match). The errormessage and some other columns from the record are then sent through a sort and merged in the merge transformation. Each time a new erromessage is needed, the data flow is sorted and merged into the other error flow before task and packagename are added, and then the erroneous record is logged in an error log table.

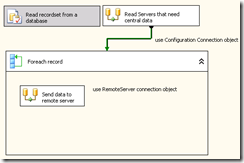

In SSIS 2012 the new Union All data flow transformation makes it easier to create this kind of data flow logic. The Merge and Sort can be replaced by a single Union All. Below is a sample with similar logic.

Another usefull property of ‘Union All’ is the ability to accept more than 2 inputs. Whereas with the merge transformation you needed 2 merge blocks for 3 inputs (as is illustrated in the 1st screenshot)