This post describes how to create a new Team Project in TFS, to which new solutions can then be added.

Prerequisites

Visual Studio must be connected to a TFS server.

- In Visual Studio, open the Team menu.

- Select Connect to Team Foundation Server…

- In the Connect to Team Project dialog, select the Team Foundation Server; do not select a project.

- Click Connect.

Procedure to create the TFS project

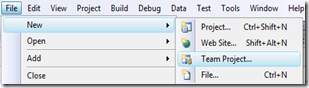

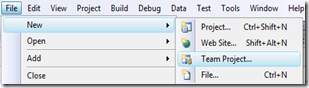

In the Visual Studio 2008 File menu, select New → Team Project…

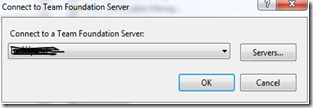

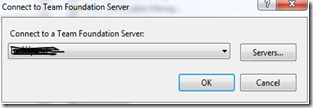

In the Connect to Team Foundation Server dialog, select the server that Visual Studio 2008 is connected to and click OK.

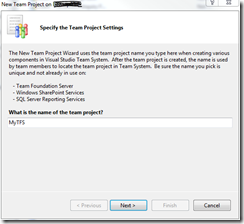

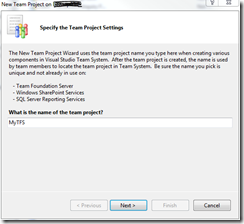

In the New Team Project on <servername> dialog, enter a name for the Team Project (the name must not already exist on that server) and click Next.

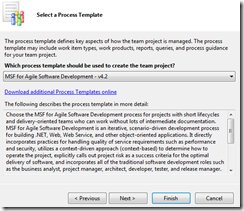

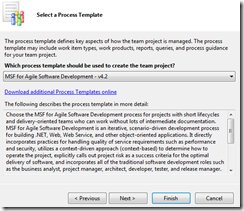

In the next step, choose a Process Template (I used the first one available) and click Next.

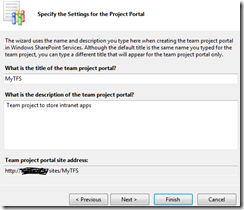

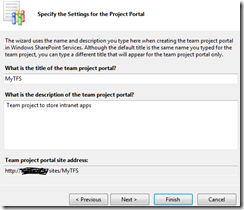

In the project portal settings, specify the title of the team project portal (I use the same name as the Team Project) and add a description. Click Next.

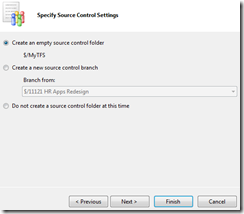

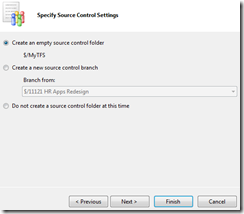

In the Specify Source Control Settings step, select Create an empty source control folder and click Next.

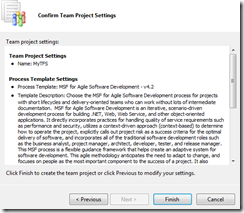

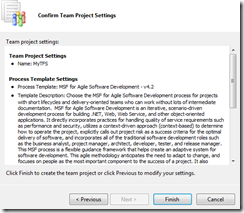

In the Confirm window, click Finish.

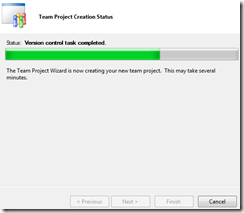

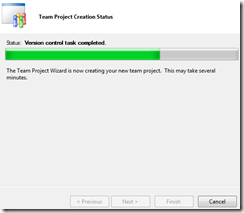

A progress bar is displayed until the project has been completely created on the TFS server.

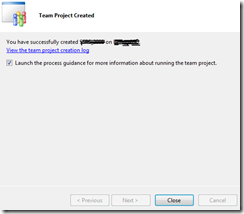

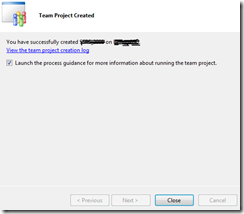

After a while (several minutes in my case), the Created dialog appears; click Close.

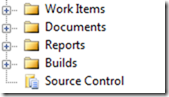

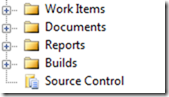

The TFS project is created, with folders for Work Items, … and Source Control.

Add a Visual Studio 2008 solution to the TFS project

For simplicity, I prefer to mirror the structure of the TFS project space in my local My Documents\Visual Studio 2008\Projects folder. Using Windows Explorer, I create a subfolder under the Projects folder with the same name as my TFS project (here, MyTFS). In this subfolder I create my new solutions and projects.
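The folder-mirroring convention can be sketched in Python. This is a minimal illustration, not part of Visual Studio or TFS: the helper name `create_mirror_folder` is hypothetical, and the Projects root is passed in as a parameter because the location of My Documents differs between Windows versions. It also assumes that the team project's root folder on the server has the form `$/<TeamProject>`.

```python
from pathlib import Path
from typing import Tuple


def create_mirror_folder(projects_root: str, team_project: str) -> Tuple[Path, str]:
    """Create <projects_root>/<team_project> locally (hypothetical helper).

    Returns the local folder and the TFS server path it is meant to mirror.
    """
    local_folder = Path(projects_root) / team_project
    # parents=True creates any missing parent folders; exist_ok=True makes
    # the call safe to repeat when the folder already exists.
    local_folder.mkdir(parents=True, exist_ok=True)
    # Assumption: the team project's root on the server is $/<name>.
    server_path = "$/" + team_project
    return local_folder, server_path
```

Calling it with your own Projects folder and `"MyTFS"` would give the local MyTFS folder together with the server path `$/MyTFS`, which are the two values needed later when the workspace is mapped.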

Create a solution and a project

In Visual Studio 2008, I create a new blank solution called MySln in the MyTFS subfolder and add a new project, MyPrj, to it.

Once the solution has been added to TFS, the structure on the server will be similar.

Create a Source Control workspace

Before adding a solution to the Team Project, you have to create a new workspace in Source Control Explorer. Open the Workspace drop-down list and select Workspaces… at the bottom of the list.

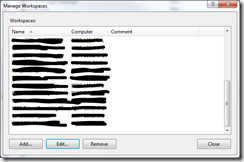

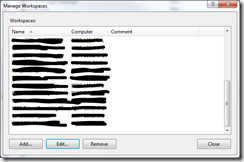

This opens the Manage Workspaces dialog. Click the Add… button.

In the Add Workspace dialog, give the workspace the same name as the TFS project. In the first row of Working folders, set the Status to Active, set the Source Control Folder to the MyTFS folder ($/MyTFS), and set the Local Folder to My Documents\Visual Studio 2008\Projects\MyTFS. Click OK.

After a short while the workspace is created.
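A working folder mapping is essentially a prefix translation between a server path and a local path. The Python sketch below illustrates that idea for the single mapping created above; it is not the TFS client's own code. The function name `server_to_local` and the local root used in the example are hypothetical, and the case-insensitive comparison reflects an assumption that server paths are not case-sensitive.

```python
from pathlib import PureWindowsPath


def server_to_local(server_path: str, mapped_server: str, mapped_local: str) -> str:
    """Translate a TFS server path to a local path under one working folder mapping.

    Hypothetical helper: raises ValueError if server_path is outside the mapping.
    """
    root = mapped_server.rstrip("/")
    # Assumption: server paths compare case-insensitively.
    if server_path.lower() == root.lower():
        relative = ""
    elif server_path.lower().startswith(root.lower() + "/"):
        relative = server_path[len(root) + 1:]
    else:
        raise ValueError(f"{server_path} is not under {mapped_server}")
    # Server paths use '/', local Windows paths use '\'.
    parts = [p for p in relative.split("/") if p]
    return str(PureWindowsPath(mapped_local).joinpath(*parts))
```

For example, with the example local root `C:\Projects\MyTFS`, `server_to_local("$/MyTFS/MySln/MySln.sln", "$/MyTFS", r"C:\Projects\MyTFS")` returns `C:\Projects\MyTFS\MySln\MySln.sln`. This is also why mirroring the folder names keeps things simple: the relative part of every path is identical on both sides.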

In Source Control Explorer, select the workspace you have just created. (Important: do not forget this before the next step.)

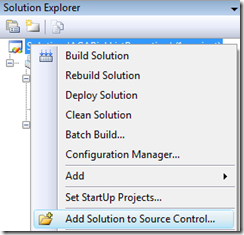

Add the Visual Studio solution to source control

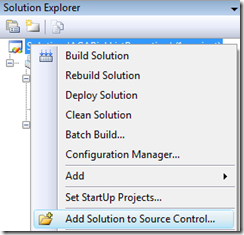

In Solution Explorer, right-click the solution and select Add Solution to Source Control…

The solution is added to the TFS project of the currently selected workspace. The source files are not checked in yet: every file in the solution has a small plus sign in front of it, meaning it is new and has not yet been checked in. Right-click the solution in Solution Explorer and select Check In…

In the Check In dialog, leave all check boxes selected and click the Check In button. A progress bar is displayed while the files are being checked in. Every file now has a small lock in front of its name, meaning it is checked in on the source control server and has become read-only on your PC.
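Because checked-in files are read-only locally, a file under the workspace folder that is still writable hints that it is checked out or not under source control. The Python sketch below lists such files by looking at each file's write permission bit. It is a rough heuristic, not a TFS feature, and the function name `writable_files` is hypothetical.

```python
import stat
from pathlib import Path
from typing import List


def writable_files(workspace_folder: str) -> List[str]:
    """Return paths (relative to workspace_folder) of files whose write bit is set.

    Heuristic: after a TFS check-in the local copies are read-only, so files
    listed here are probably checked out or not under source control.
    """
    root = Path(workspace_folder)
    found = []
    for path in sorted(root.rglob("*")):
        # stat.S_IWRITE is the owner-write bit; on Windows Python clears it
        # in st_mode when the file has the read-only attribute.
        if path.is_file() and path.stat().st_mode & stat.S_IWRITE:
            found.append(str(path.relative_to(root)))
    return found
```

Run against the MyTFS folder right after the check-in, this would typically still report files that were never added to source control, such as build output in bin and obj or the user-specific .suo file.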