Analysis Services Translations

Analysis Services (SSAS) provides an easy translation mechanism for a richer end-user experience. SSAS Translations allows for the translation of the captions of dimensions and facts as well as the translation of the content of dimension name columns. To easily show the cube in his preferred language the end-user has to use a tool that makes use of SSAS Translations. MS-Excel 2013 is such a tool. Depending on the installed display language of MS-Excel and provided the cube has a translation for the display language the cube and dimensions will automatically be shown in the default display language. Provided the necessary language packs for Office are installed, end-users can change the display language and create MS-Excel reports in the language they need, as long as the SSAS cube provides translations in the display language in MS-Excel.

Setup dimension tables with different languages in SQL-Server

Every dimension table that has name columns to display the dimension members, should have additional name columns for every language end users request. There is always a default name column.

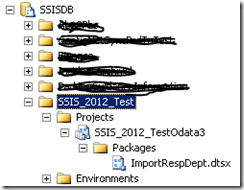

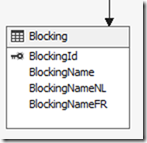

For instance: a dimension table called DimBlocking

CREATE TABLE [dbo].[DimBlocking](

[BlockingId] [int] NOT NULL,

[BlockingName] [nvarchar](50) NOT NULL,

CONSTRAINT [PK_DimBlocking] PRIMARY KEY CLUSTERED

(

[BlockingId] ASC

) ON [PRIMARY]

) ON [PRIMARY]

This dimension has a key column ‘BlockingId’ and a name column ‘BlockingName’. This name column will serve as the default name column in the SSAS dimension specification.

This table example has 2 rows of data

BlockingId |

BlockingName |

0 |

Non-blocking |

1 |

Blocking |

To provide for other languages like Dutch and French, just add name columns of the same type and size as the default name column, e.g. BlockingNameNL for Dutch and BlockingNameFR for French.

CREATE TABLE [dbo].[DimBlocking](

[BlockingId] [int] NOT NULL,

[BlockingName] [nvarchar](50) NOT NULL,

[BlockingNameNL] [nvarchar](50) NOT NULL,

[BlockingNameFR] [nvarchar](50) NOT NULL,

After adding the translation columns:

BlockingId |

BlockingName |

BlockingNameNL |

BlockingNameFR |

0 |

Non-blocking |

Niet-blokkerend |

Non-bloquant |

1 |

Blocking |

Blokkerend |

Bloquant |

Setup MS-Excel to use the different languages provided by a cube in SSAS

Office Language preferences

By default MS-Excel is installed with one Display language. This can be verified in the Options dialog window of MS-Excel, under the Language tab. In the screenshot below, Office 2013 was installed with English Display language.

Install additional language packs from an Office language pack as needed. There are a few considerations when making translations for a country like Belgium where ‘Dutch (Belgium)’ is installed as Windows language, but the language pack for Office 2013 only offers Dutch from The Netherlands: ‘Nederlands (Nederland)’. Take care to setup SSAS translations to match the name of the Display language and not of the Editing language.

Setup dimension translations for different languages in SSAS

Data Source View

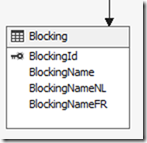

Make sure that the Data Source View is refreshed to read the metadata for the new name columns. If your dimension table is based on a query, then adapt the query to read the new name columns. The table DimBlocking has been given the Friendly name ‘Blocking’.

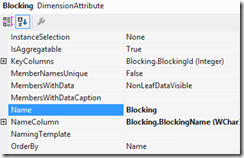

Dimension designer - Structure

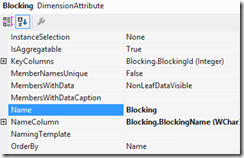

The dimension has a key and a name column. Most often a simple dimension with a key and a name has one Attribute, in this example called ‘Blocking’ with KeyColumn = Blocking.BlockingId and NameColumn = Blocking.BlockingName.

Dimension Designer – Translations

In the Translations design, we can add a new translations for the captions, for the Attributes and also for the [All] and [Unknown] members.

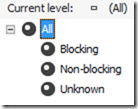

Preparation to translate [All] and [Unknown] members

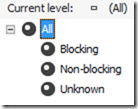

The captions of these 2 members appear by default when browsing a dimension:

To make [All] and [Unknown] translatable we can explicitly specify them in the Dimension properties.

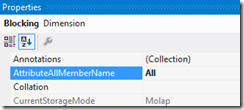

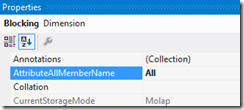

In the Dimension Structure design, select the Dimension in the Attributes Pane, and look for the following 2 Dimension properties:

- AttributeAllMemberName: set this to ‘All’ to enable translation. (Or use another word, e.g. Everything)

- UnknownMemberName: set this to ‘Unknown’ to enable translation (Or use any other word, like ‘Unidentified’)

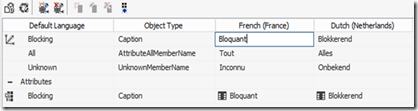

Add Translations

In the Dimension Designer go to the Translations tab.

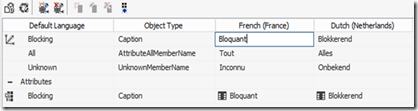

The Dimension properties that have translatable captions:

- Caption

- AttributeAllMemberName

- UnknownMemberName.

And for the Attributes:

Click on the ‘Add new translation’ button

The Select language dialog box opens:

Select the language needed and make sure you have an exact match with the display language installed in MS-Office. E.g. choose ‘Dutch (Netherlands)’ when the Office 2013 language pack for ‘Nederlands (Nederland)’ is installed. Because I have a Dutch Office 2013 installation, the display language is shown translated by MS-Office.

For the Dimension Caption and Member names, type text in the Translation column.

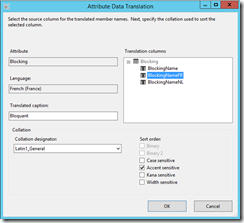

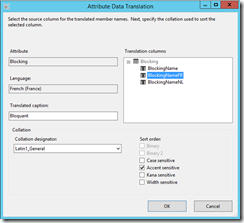

For the Attribute translation, there is a translation for the caption, but here you can select a column name to use as translation for the attribute data. Click inside the translation column and you see a button. Click on the button to open the Attribute Data Translation dialog window. Enter the Translated caption and select the Translation column from the dimension table.

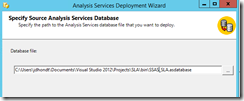

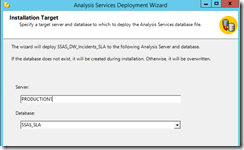

Save and deploy this dimension, make sure it is processed.

Connect MS-Excel to the SSAS cube

Use the default display language

The Dutch version of MS-Excel is setup to use ‘Nederlands’ as display language:

In the spreadsheet, connect to the Analysis Services Cube and create a new pivot table. The Pivot table fields shows the Blocking dimension with translation.

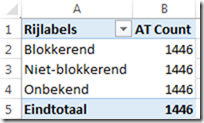

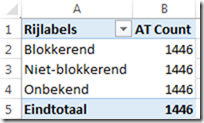

After selection of this dimension in the Rows section, the pivot table shows the Attribute data translated in Dutch.

Excel row 2, 3 and 4 show the translated members of the ‘Blocking’ attribute.

MS-Excel automatically translates ‘Row-labels’ and ‘Grand total’ as ‘Rijlabels’ and ‘Eindtotaal’. This is not a function of the SSAS translation.

Change to another display language

- Change the Display language to French.

- Close and restart MS-Excel.

- Open the same spreadsheet.

- In the Data section, click on the Refresh button.

- The pivot table is now shown with French captions and values.