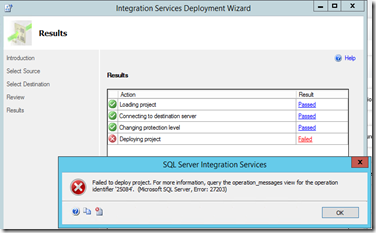

When an SSIS project is deployed from SQL Server Data Tools to the Integration Services Catalog, the project and package connections and parameters are stored together with the project and the packages. Each parameter or connection property in the Catalog can be reconfigured by means of environments. With environments properly set up, developers do not need to worry about changing connections or parameters when they release an upgrade to the production server, because the production environment automatically replaces the test values in the connections and parameters with the values set up for production.

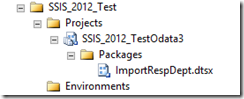

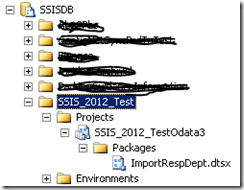

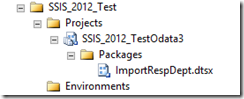

Catalog folder after 1st time deployment

An example of a project in the catalog with 1 package. No Environments have been created yet.

Create Environments

In this example we will create two new environments with a T-SQL script: Test and Production.

---- create environment

EXEC [SSISDB].[catalog].[create_environment] @environment_name=N'Production', @environment_description=N'', @folder_name=N'SSIS_2012_Test'

GO

EXEC [SSISDB].[catalog].[create_environment] @environment_name=N'Test', @environment_description=N'', @folder_name=N'SSIS_2012_Test'

GO

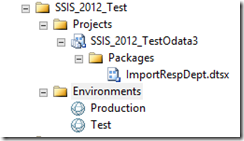

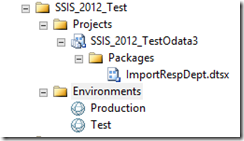

After the script is executed, the two new environments are visible in the Environments folder.
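If the script may be run more than once, a guarded variant can help, because `catalog.create_environment` raises an error when the environment already exists. The following is a minimal sketch, assuming the folder `SSIS_2012_Test` already exists in the catalog. It checks the `catalog.environments` and `catalog.folders` views before creating the environment, and then lists the environments in the folder.

```sql
-- Sketch: create the 'Test' environment only if it does not exist yet
IF NOT EXISTS (
    SELECT 1
    FROM [SSISDB].[catalog].[environments] AS e
    JOIN [SSISDB].[catalog].[folders] AS f ON f.folder_id = e.folder_id
    WHERE f.name = N'SSIS_2012_Test'
      AND e.name = N'Test'
)
    EXEC [SSISDB].[catalog].[create_environment]
         @folder_name = N'SSIS_2012_Test',
         @environment_name = N'Test',
         @environment_description = N'';
GO

-- List the environments that now exist in the folder
SELECT f.name AS folder_name,
       e.name AS environment_name,
       e.created_time
FROM [SSISDB].[catalog].[environments] AS e
JOIN [SSISDB].[catalog].[folders] AS f ON f.folder_id = e.folder_id
WHERE f.name = N'SSIS_2012_Test'
ORDER BY e.name;
```

The same guard can be repeated for the 'Production' environment.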

Create environment variables

For project parameters

The SSIS project has a project parameter ‘DaysToLoad’ with value 15. To see it in the project configuration, right-click the project and select ‘Configure…’.

With a T-SQL script, create an environment variable ‘prjDaysToLoad’ in both environments:

---- create the environment variable in both environments (the int value matches @data_type Int32)

DECLARE @var int = 30

EXEC [SSISDB].[catalog].[create_environment_variable] @variable_name=N'prjDaysToLoad', @sensitive=0, @description=N'', @environment_name=N'Production', @folder_name=N'SSIS_2012_Test', @value=@var, @data_type=N'Int32'

EXEC [SSISDB].[catalog].[create_environment_variable] @variable_name=N'prjDaysToLoad', @sensitive=0, @description=N'', @environment_name=N'Test', @folder_name=N'SSIS_2012_Test', @value=@var, @data_type=N'Int32'

GO
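The script above stores the same value in both environments. In practice the point of having two environments is that the values differ. As a hedged sketch, the value in the 'Test' environment could be changed afterwards with `catalog.set_environment_variable_value`. The value 7 is a made-up example and does not come from the original setup.

```sql
-- Sketch: give the Test environment its own value (7 is a hypothetical value)
DECLARE @testDays int = 7;

EXEC [SSISDB].[catalog].[set_environment_variable_value]
     @folder_name = N'SSIS_2012_Test',
     @environment_name = N'Test',
     @variable_name = N'prjDaysToLoad',
     @value = @testDays;
GO

-- Compare the variable across the environments in the folder
SELECT e.name AS environment_name,
       v.name AS variable_name,
       v.type,
       v.sensitive,
       v.value
FROM [SSISDB].[catalog].[environment_variables] AS v
JOIN [SSISDB].[catalog].[environments] AS e ON e.environment_id = v.environment_id
JOIN [SSISDB].[catalog].[folders] AS f ON f.folder_id = e.folder_id
WHERE f.name = N'SSIS_2012_Test'
  AND v.name = N'prjDaysToLoad'
ORDER BY e.name;
```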

Add a reference to the environment in the project

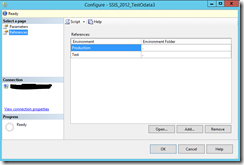

The project needs to be made aware of the available environments first.

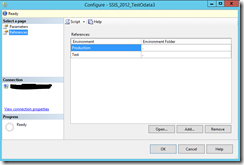

You can do this by right-clicking on the project name, selecting ‘Configure…’ and adding the reference in the References panel. Or you can do it with a T-SQL script:

---- add a relative reference ('R': the environment is in the same folder as the project)

DECLARE @reference_id bigint

EXEC [SSISDB].[catalog].[create_environment_reference] @environment_name=N'Production', @reference_id=@reference_id OUTPUT, @project_name=N'SSIS_2012_TestOdata3', @folder_name=N'SSIS_2012_Test', @reference_type='R'

EXEC [SSISDB].[catalog].[create_environment_reference] @environment_name=N'Test', @reference_id=@reference_id OUTPUT, @project_name=N'SSIS_2012_TestOdata3', @folder_name=N'SSIS_2012_Test', @reference_type='R'

GO
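To check the references without opening the dialog, the `catalog.environment_references` view can be queried. This is a small sketch using the folder and project names from the script above. For a relative reference, `environment_folder_name` is expected to be NULL, because the environment is looked up in the project's own folder.

```sql
-- Sketch: list the environment references of the project
SELECT p.name AS project_name,
       r.reference_id,
       r.reference_type,           -- 'R' = relative, 'A' = absolute
       r.environment_folder_name,  -- expected to be NULL for relative references
       r.environment_name
FROM [SSISDB].[catalog].[environment_references] AS r
JOIN [SSISDB].[catalog].[projects] AS p ON p.project_id = r.project_id
JOIN [SSISDB].[catalog].[folders] AS f ON f.folder_id = p.folder_id
WHERE f.name = N'SSIS_2012_Test'
  AND p.name = N'SSIS_2012_TestOdata3'
ORDER BY r.environment_name;
```

The `reference_id` values returned here are the ones needed later when a package is started with a specific environment.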

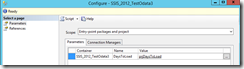

The result in the configure dialog window looks like this

The environment variable in the Production and Test environments

The ‘prjDaysToLoad’ variable created by the script above is now present in both environments. See the result in the ‘Production’ environment.

Reference the environment variable from a parameter in the Project

You can set this in the project's ‘Configure…’ dialog, or do it with a T-SQL script:

---- reference the environment from the project parameter

EXEC [SSISDB].[catalog].[set_object_parameter_value] @object_type=20, @parameter_name=N'DaysToLoad', @object_name=N'SSIS_2012_TestOdata3', @folder_name=N'SSIS_2012_Test', @project_name=N'SSIS_2012_TestOdata3', @value_type='R', @parameter_value=N'prjDaysToLoad'

GO
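Whether the parameter now points at the environment variable can be verified in the `catalog.object_parameters` view. The sketch below assumes the names used in the script above. A `value_type` of 'R' together with a filled `referenced_variable_name` indicates that the value is taken from an environment variable at execution time.

```sql
-- Sketch: inspect how the project parameter 'DaysToLoad' is bound
SELECT op.object_name,
       op.parameter_name,
       op.design_default_value,     -- the value set in the project at design time
       op.value_type,               -- 'R' = referenced, 'V' = literal value
       op.referenced_variable_name  -- name of the environment variable, if referenced
FROM [SSISDB].[catalog].[object_parameters] AS op
JOIN [SSISDB].[catalog].[projects] AS p ON p.project_id = op.project_id
JOIN [SSISDB].[catalog].[folders] AS f ON f.folder_id = p.folder_id
WHERE f.name = N'SSIS_2012_Test'
  AND p.name = N'SSIS_2012_TestOdata3'
  AND op.object_type = 20           -- 20 = project-level parameter
  AND op.parameter_name = N'DaysToLoad';
```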

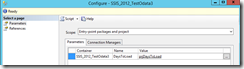

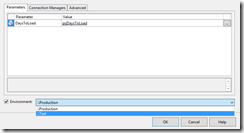

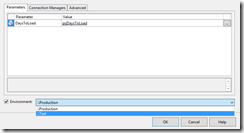

This is the result

Be careful: the name of the environment variable needs to be identical in both environments, because the project parameter refers to the variable by name.
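A quick way to spot a naming mismatch is to look for variables that exist in only one of the two environments. The query below is a sketch under the assumption that both environments live in the folder `SSIS_2012_Test`. It returns no rows when every variable name is defined in both.

```sql
-- Sketch: variables that are missing from one of the two environments
SELECT v.name AS variable_name,
       COUNT(DISTINCT e.name) AS environments_defining_it
FROM [SSISDB].[catalog].[environment_variables] AS v
JOIN [SSISDB].[catalog].[environments] AS e ON e.environment_id = v.environment_id
JOIN [SSISDB].[catalog].[folders] AS f ON f.folder_id = e.folder_id
WHERE f.name = N'SSIS_2012_Test'
  AND e.name IN (N'Production', N'Test')
GROUP BY v.name
HAVING COUNT(DISTINCT e.name) < 2;
```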

Run the package manually from the Catalog with a reference to the environment

When a package in this project is run we add the reference to one of the environments ‘Production’ or ‘Test’.

Right-click on a package and select Execute. The Execute Package dialog will warn that no environment is selected for the parameter that has a reference.

Check the Environment checkbox and select one of the environments.

When OK is clicked the package will use the value for the parameter that comes from the selected environment.
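The same execution can be started from T-SQL with `catalog.create_execution` and `catalog.start_execution`. The following is a sketch, not the only way to do it. The package name `Package.dtsx` is an assumption, since the original does not name the package, and the reference id is looked up because it differs per server.

```sql
-- Sketch: run a package with the 'Test' environment selected
DECLARE @reference_id bigint, @execution_id bigint;

-- Look up the id of the project's relative reference to the 'Test' environment
SELECT @reference_id = r.reference_id
FROM [SSISDB].[catalog].[environment_references] AS r
JOIN [SSISDB].[catalog].[projects] AS p ON p.project_id = r.project_id
JOIN [SSISDB].[catalog].[folders] AS f ON f.folder_id = p.folder_id
WHERE f.name = N'SSIS_2012_Test'
  AND p.name = N'SSIS_2012_TestOdata3'
  AND r.environment_name = N'Test'
  AND r.reference_type = 'R';

-- Create the execution; 'Package.dtsx' is a hypothetical package name
EXEC [SSISDB].[catalog].[create_execution]
     @folder_name = N'SSIS_2012_Test',
     @project_name = N'SSIS_2012_TestOdata3',
     @package_name = N'Package.dtsx',
     @reference_id = @reference_id,
     @use32bitruntime = 0,
     @execution_id = @execution_id OUTPUT;

-- Start it; the referenced parameter takes its value from the 'Test' environment
EXEC [SSISDB].[catalog].[start_execution] @execution_id;

SELECT @execution_id AS execution_id;
```

Switching `N'Test'` to `N'Production'` in the lookup selects the other environment without touching the package.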

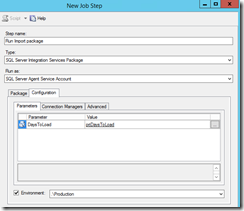

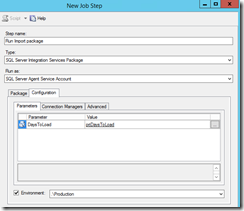

Run the package in a SQL Server Agent job with a reference to the environment

In a job step the procedure is similar: select the package, check the Environment checkbox and select one of the environments.

Conclusion

When SSIS projects are deployed from a development environment to a production environment, you do not need to change parameter or connection settings in your Visual Studio project before the deployment. In the Integration Services Catalog you can set up one or more environments with variables for parameters and connections that differ from the development environment. The developer need not worry about deploying to production (either directly or through a deployment script), because the DBA can set up an environment in the Catalog so that the packages connect to databases and use parameters adapted for this environment.

In a production environment I tend to create only the ‘production’ environment, and on a test or QA server only the ‘test’ environment. You can still use several environments on a production server, for example when the package needs to run ETL operations against several production servers.